    # mke2fs -j /dev/sdb7
    # ip addr add 192.168.1.20/24 dev eth0
    # mount -t ext3 /dev/sdb7 /mnt/oracle
    # chown oracle.oracle /mnt/oracle
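Before launching the installer it can help to confirm that the ownership change actually took effect on the shared mount. The following is a minimal sketch, not part of the original procedure; `owner_of` and `check_owner` are hypothetical helper names, and it assumes GNU `stat` (as shipped on RHEL).

```shell
#!/bin/sh
# Sketch: print "user:group" for a path (relies on GNU stat's -c option).
owner_of() {
    stat -c '%U:%G' "$1"
}

# Succeed only if the path is owned by the expected "user:group".
check_owner() {
    # $1 = path, $2 = expected owner in user:group form
    [ "$(owner_of "$1")" = "$2" ]
}

# Example, using the mount point from the steps above:
# check_owner /mnt/oracle oracle:oracle || echo "fix ownership before installing" >&2
```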

The Oracle installer must be run as the oracle user. I had to add -ignoreSysPrereqs to allow the installer to run on RHEL5.

    # su - oracle
    $ cd /mnt/oracle_install
    $ export ORACLE_HOSTNAME=svc0.foo.test.com
    $ ./runInstaller OUI_HOSTNAME=svc0.foo.test.com -ignoreSysPrereqs

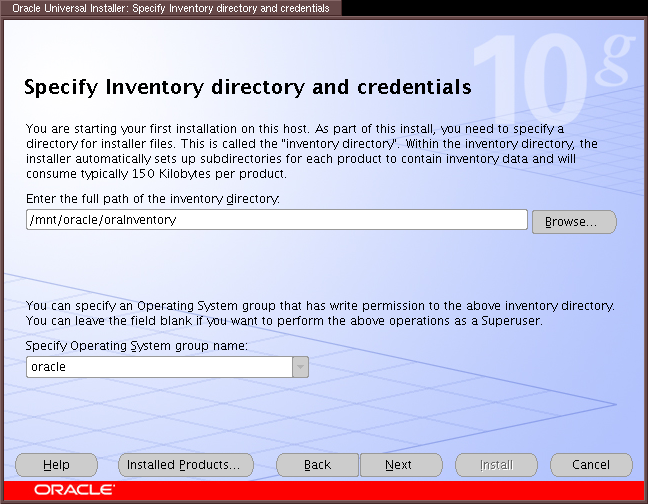

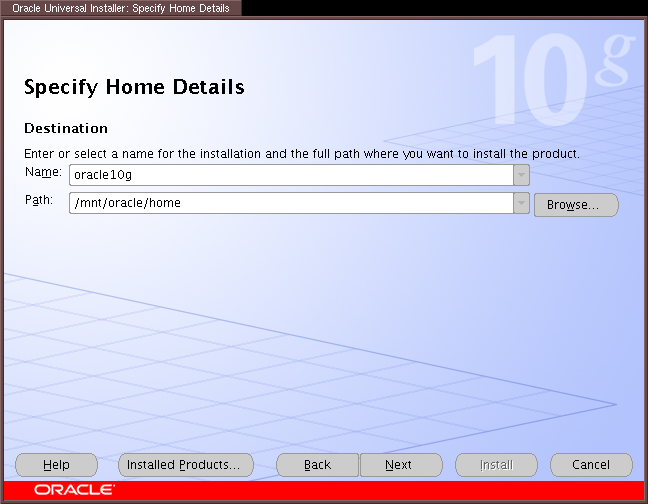

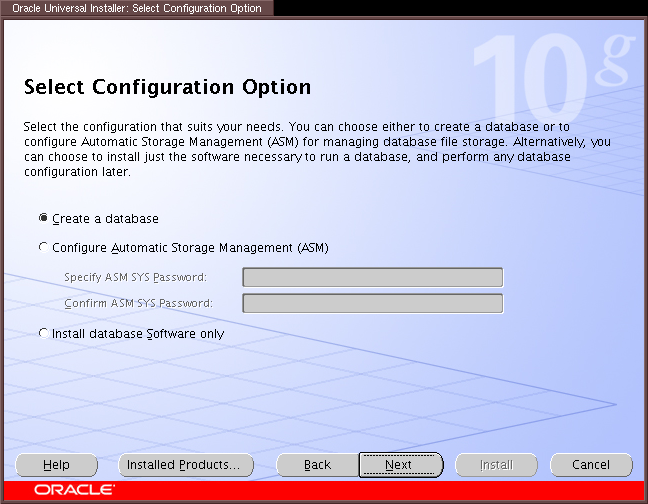

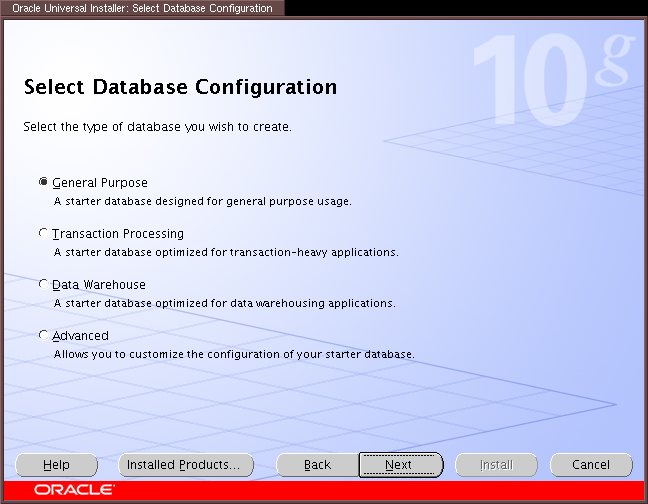

Here are some screenshots to give you an idea of how I installed it.

Install everything on shared storage, including the oraInventory parts.

I used /mnt/oracle/home as my Oracle home directory.

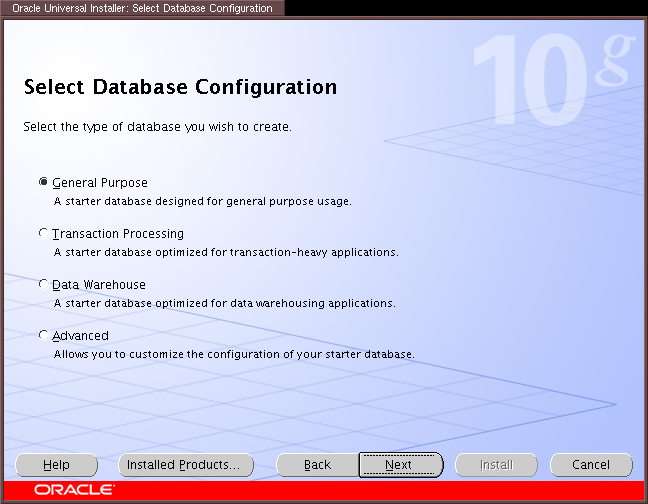

Defaults.

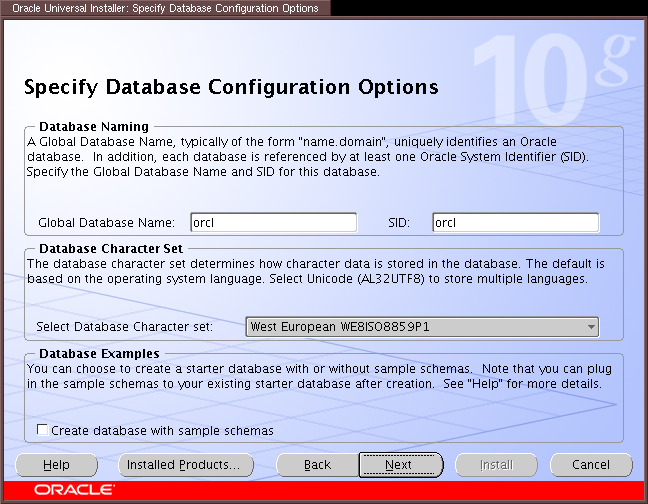

Defaults.

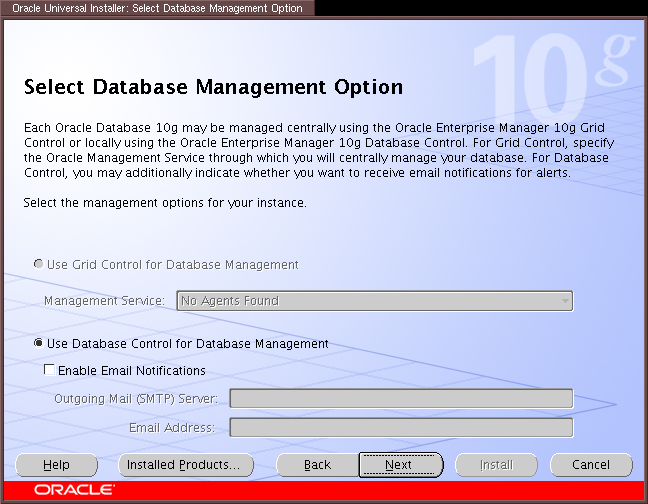

Defaults.

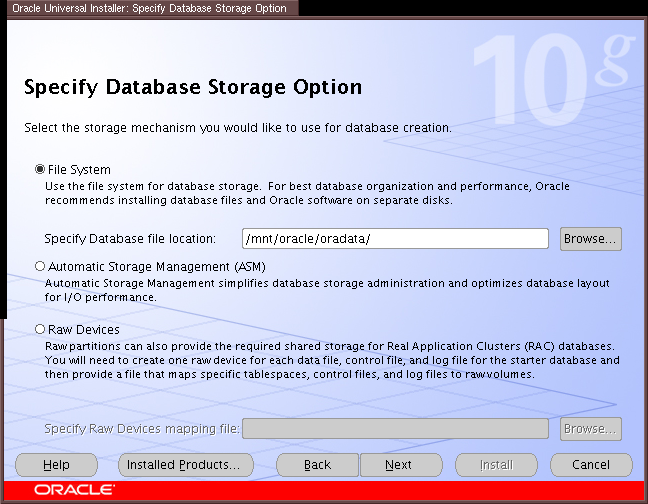

Defaults.

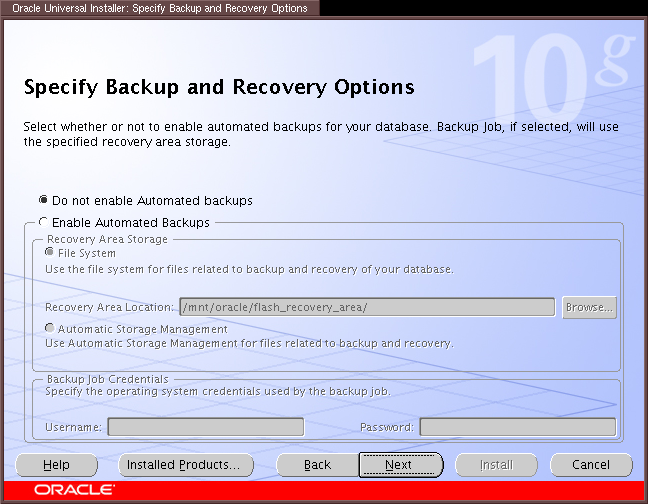

Defaults.

Defaults.

Defaults.

Specify passwords for administration accounts.

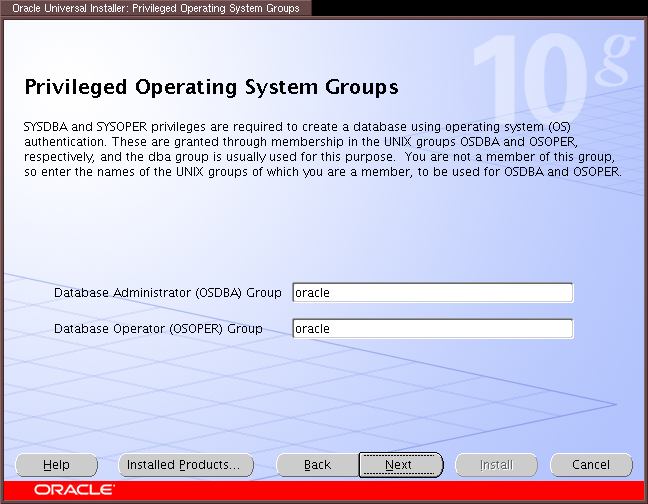

Make sure these match your OS group names.

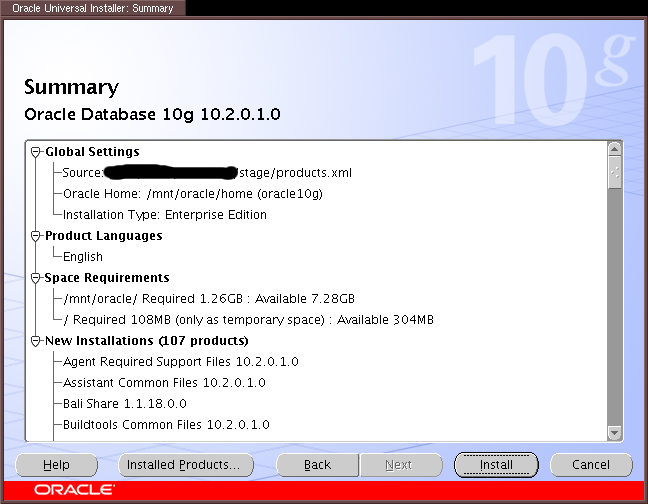

Ready to install.

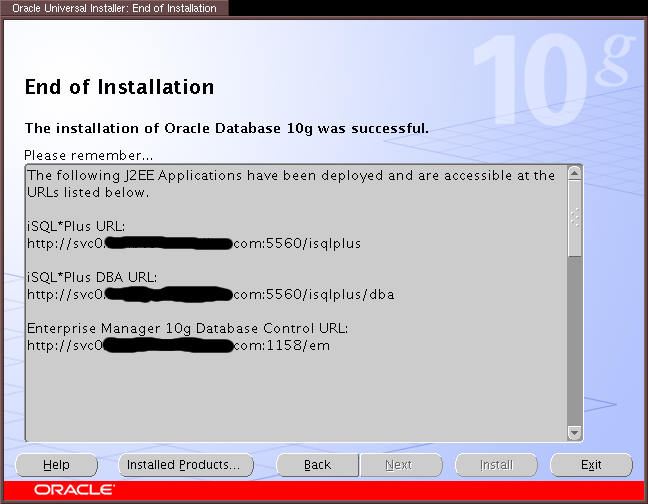

Installation complete.

Note: If installation fails for any reason, please consult Oracle's documentation.

http://svc0.foo.test.com:1158/em

Login: SYS

Password: (your password)

Connect As: SYSDBA

I had to change $ORACLE_HOME/network/admin/listener.ora from:

    ...
    SID_LIST_LISTENER =
      (SID_LIST =
        (SID_DESC =
          (SID_NAME = PLSExtProc)
          (ORACLE_HOME = /mnt/oracle/home)
          (PROGRAM = extproc)
        )
      )
    ...

to:

    SID_LIST_LISTENER =
      (SID_LIST =
        (SID_DESC =
          (GLOBAL_DBNAME = svc0.foo.test.com)
          (ORACLE_HOME = /mnt/oracle/home)
          (SID_NAME = orcl)
        )
        (SID_DESC =
          (SID_NAME = PLSExtProc)
          (ORACLE_HOME = /mnt/oracle/home)
          (PROGRAM = extproc)
        )
      )

Failure to do this meant I could not use the Enterprise Manager to check the status of the database.
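A quick way to confirm that the edit landed is to look for the new static SID entry in listener.ora. This is only a rough sketch: `has_static_sid` is a hypothetical helper that greps the file for a `(SID_NAME = ...)` line, and it does not replace checking the running listener itself.

```shell
#!/bin/sh
# Hypothetical helper: succeed if listener.ora contains a (SID_NAME = <sid>) entry.
has_static_sid() {
    # $1 = path to listener.ora, $2 = SID to look for (plain name, no regex characters)
    grep -Eq "^[[:space:]]*\(SID_NAME[[:space:]]*=[[:space:]]*$2\)" "$1"
}

# Example, using the Oracle home from this guide:
# has_static_sid /mnt/oracle/home/network/admin/listener.ora orcl \
#     && echo "orcl has a static listener entry"
```

The match is deliberately loose about whitespace, since listener.ora files are often hand-edited and indented inconsistently.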

    <service domain="redpref" name="oracle10g">
      <fs device="/dev/sdb7" force_unmount="1" fstype="ext3"
          mountpoint="/mnt/oracle" name="Oracle Mount"/>
      <oracledb name="orcl" user="oracle" home="/mnt/oracle/home"
                type="10g" vhost="svc0.foo.test.com"/>
      <ip address="192.168.1.20" />
    </service>

If you prefer the script interface (so that you can add the Oracle script with the GUI), you need to customize ORACLE_HOME, ORACLE_USER, ORACLE_SID, ORACLE_LOCKFILE, and ORACLE_TYPE to match your environment. If you do it this way, the service should look like this:

    <service domain="redpref" name="oracle10g">
      <fs device="/dev/sdb7" force_unmount="1" fstype="ext3"
          mountpoint="/mnt/oracle" name="Oracle Mount"/>
      <script name="oracle10g" path="/path/to/oracledb.sh"/>
      <ip address="192.168.1.20" />
    </service>

(with the <oracledb> line changed to a <script> line if you used the script method...)

    [root@cyan ~]# rg_test test /etc/cluster/cluster.conf stop \
        service oracle10g
    Running in test mode.
    Stopping oracle10g...
    Restarting /usr/share/cluster/oracledb.sh as oracle.
    Stopping Oracle EM DB Console: [ OK ]
    Stopping iSQL*Plus: [ OK ]
    Stopping Oracle Database: [ OK ]
    Stopping Oracle Listener: [ OK ]
    Waiting for all Oracle processes to exit: [ OK ]
    <info> Removing IPv4 address 192.168.1.20/22 from eth0
    <info> unmounting /mnt/oracle
    Stop of oracle10g complete

    [root@cyan home]# rg_test test /etc/cluster/cluster.conf \
        start service oracle10g
    Running in test mode.
    Starting oracle10g...
    <info> mounting /dev/sdb7 on /mnt/oracle
    <debug> mount -t ext3 /dev/sdb7 /mnt/oracle
    <debug> Link for eth0: Detected
    <info> Adding IPv4 address 192.168.1.20/22 to eth0
    <debug> Sending gratuitous ARP: 192.168.1.20 00:02:55:54:28:6c \
        brd ff:ff:ff:ff...
    Restarting /usr/share/cluster/oracledb.sh as oracle.
    Starting Oracle Database: [ OK ]
    Starting Oracle Listener: [ OK ]
    Starting iSQL*Plus: [ OK ]
    Starting Oracle EM DB Console: [ OK ]
    Start of oracle10g complete
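The stop and start runs above can be wrapped in a small helper so that the full cycle is repeatable. This is a sketch under stated assumptions: `cycle_service`, `RG_TEST`, and `CLUSTER_CONF` are names introduced here for illustration, and the early return assumes `rg_test` exits non-zero when an action fails, which I have not verified.

```shell
#!/bin/sh
# Sketch: run the same rg_test stop/start sequence as the transcripts above.
# RG_TEST and CLUSTER_CONF are overridable so the function can be tried off-cluster.
RG_TEST=${RG_TEST:-rg_test}
CLUSTER_CONF=${CLUSTER_CONF:-/etc/cluster/cluster.conf}

cycle_service() {
    # $1 = service name as it appears in cluster.conf, e.g. oracle10g
    for action in stop start; do
        echo "== $action $1 =="
        # Assumption: rg_test returns non-zero when the action fails.
        "$RG_TEST" test "$CLUSTER_CONF" "$action" service "$1" || return 1
    done
}

# Example:
# cycle_service oracle10g
```

Setting `RG_TEST=echo` prints the commands instead of running them, which is a cheap way to review what would be executed.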