Abstract

Dustin L. Black, RHCA

Let’s Talk Distributed Storage

- Decentralize and Limit Failure Points
- Scale with Commodity Hardware and Familiar Operating Environments
- Reduce Dependence on Specialized Technologies and Skills

Gluster

- Clustered Scale-out General Purpose Storage Platform
- Fundamentally File-Based & POSIX End-to-End
- Familiar Filesystems Underneath (EXT4, XFS, BTRFS)
- Familiar Client Access (NFS, Samba, FUSE)
- No Metadata Server
- Standards-Based – Clients, Applications, Networks
- Modular Architecture for Scale and Functionality
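The "No Metadata Server" point can be illustrated with a minimal sketch: every client computes a file's location from its name, so no central lookup service is needed. This is a simplified stand-in, not GlusterFS's actual distribution logic (which assigns hash ranges per directory rather than taking a modulo). The function name `pick_brick` and the brick paths are hypothetical.

```python
import hashlib

def pick_brick(filename: str, bricks: list[str]) -> str:
    """Choose a brick from the file name alone (illustrative only)."""
    # Hash the name to a stable integer; any client gets the same answer.
    digest = hashlib.sha256(filename.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(bricks)
    return bricks[index]

# Hypothetical bricks; real deployments list server:/path pairs like these.
bricks = [
    "server1:/export/brick1",
    "server2:/export/brick1",
    "server3:/export/brick1",
]

for name in ["report.pdf", "photo.jpg", "notes.txt"]:
    print(name, "->", pick_brick(name, bricks))
```

Because the placement is a pure function of the name and the brick list, two clients that agree on the brick list agree on where every file lives.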

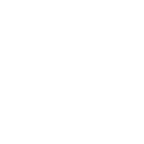

Gluster Stack

Gluster Architecture

Ceph

- Massively scalable, software-defined storage system
- Commodity hardware with no single point of failure
- Self-healing and Self-managing
- Rack and data center aware
- Automatic distribution of replicas
- Block, Object, File
- Data stored on common backend filesystems (EXT4, XFS, etc.)
- Fundamentally distributed as objects via RADOS
- Client access via RBD, RADOS Gateway, and Ceph Filesystem
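A rough sketch of what "rack aware" and "automatic distribution of replicas" mean in practice: replicas of an object are placed deterministically, and no two replicas share a rack. This is not Ceph's CRUSH algorithm; it uses rendezvous (highest-random-weight) hashing as a much simpler stand-in. The names `place_replicas` and `osds_by_rack` and the rack/OSD labels are hypothetical.

```python
import hashlib

def score(obj: str, osd: str) -> int:
    """Stable pseudo-random weight for an (object, OSD) pair."""
    digest = hashlib.sha256(f"{obj}:{osd}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")

def place_replicas(obj: str, osds_by_rack: dict[str, list[str]], replicas: int = 3):
    """Pick one OSD in each of `replicas` distinct racks (not CRUSH)."""
    if len(osds_by_rack) < replicas:
        raise ValueError("need at least as many racks as replicas")
    candidates = []
    for rack, osds in osds_by_rack.items():
        # Best-scoring OSD inside this rack for this object.
        best = max(osds, key=lambda osd: score(obj, osd))
        candidates.append((score(obj, best), rack, best))
    # Keep the highest-scoring racks; each rack appears at most once.
    candidates.sort(reverse=True)
    return [(rack, osd) for _, rack, osd in candidates[:replicas]]

# Hypothetical cluster layout.
osds_by_rack = {
    "rack-a": ["osd.0", "osd.1"],
    "rack-b": ["osd.2", "osd.3"],
    "rack-c": ["osd.4", "osd.5"],
    "rack-d": ["osd.6", "osd.7"],
}

print(place_replicas("customer-object-123", osds_by_rack))
```

Losing a whole rack then costs at most one replica of any object, which is the property the rack-awareness bullet is describing.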

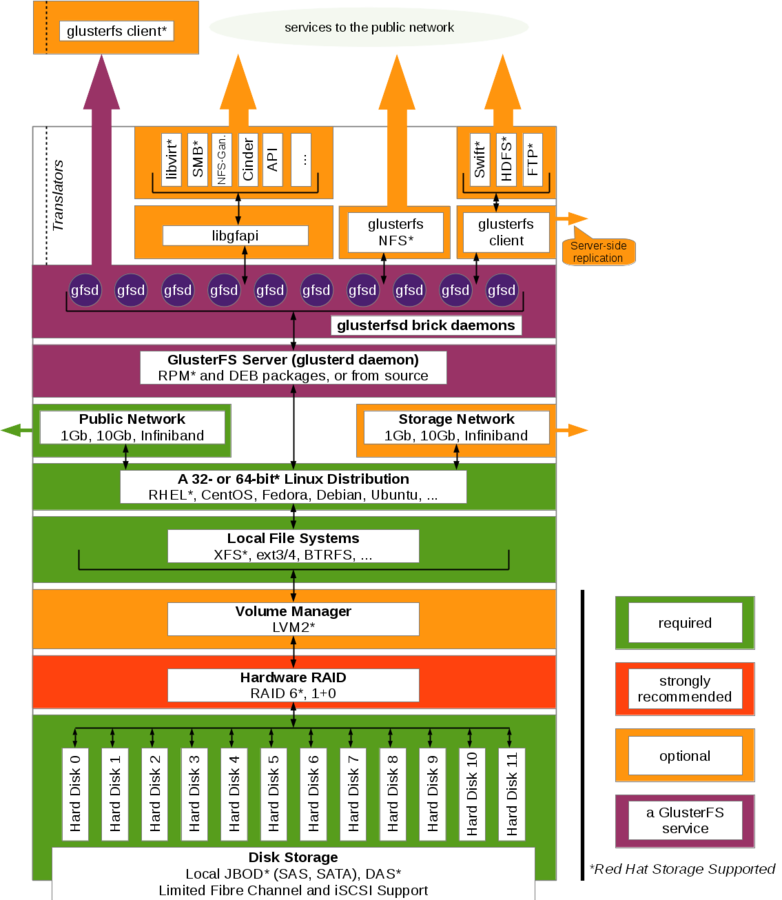

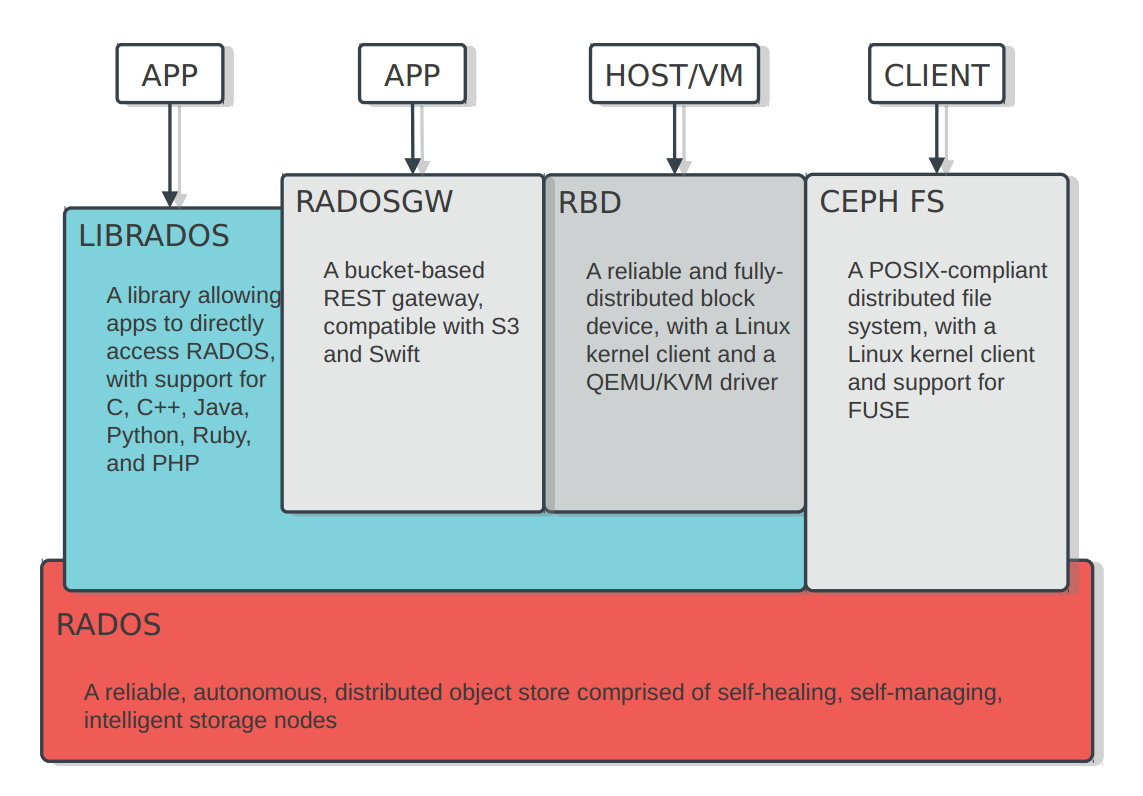

Ceph Stack

Ceph Architecture

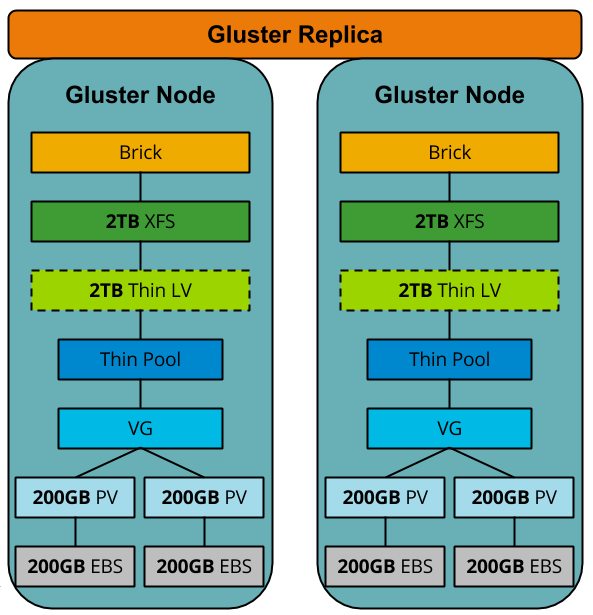

Gluster Use Case: Client File Services in AWS

Challenge

- Isolated customers for web portal SaaS
- POSIX filesystem requirement
- Efficient elastic provisioning of EBS
  - 2PB provisioned; only 20% utilized
- Two backup tiers
  - Self-service & Disaster recovery
- Flexibility to scale up and out on-demand

Implementation


Diagram

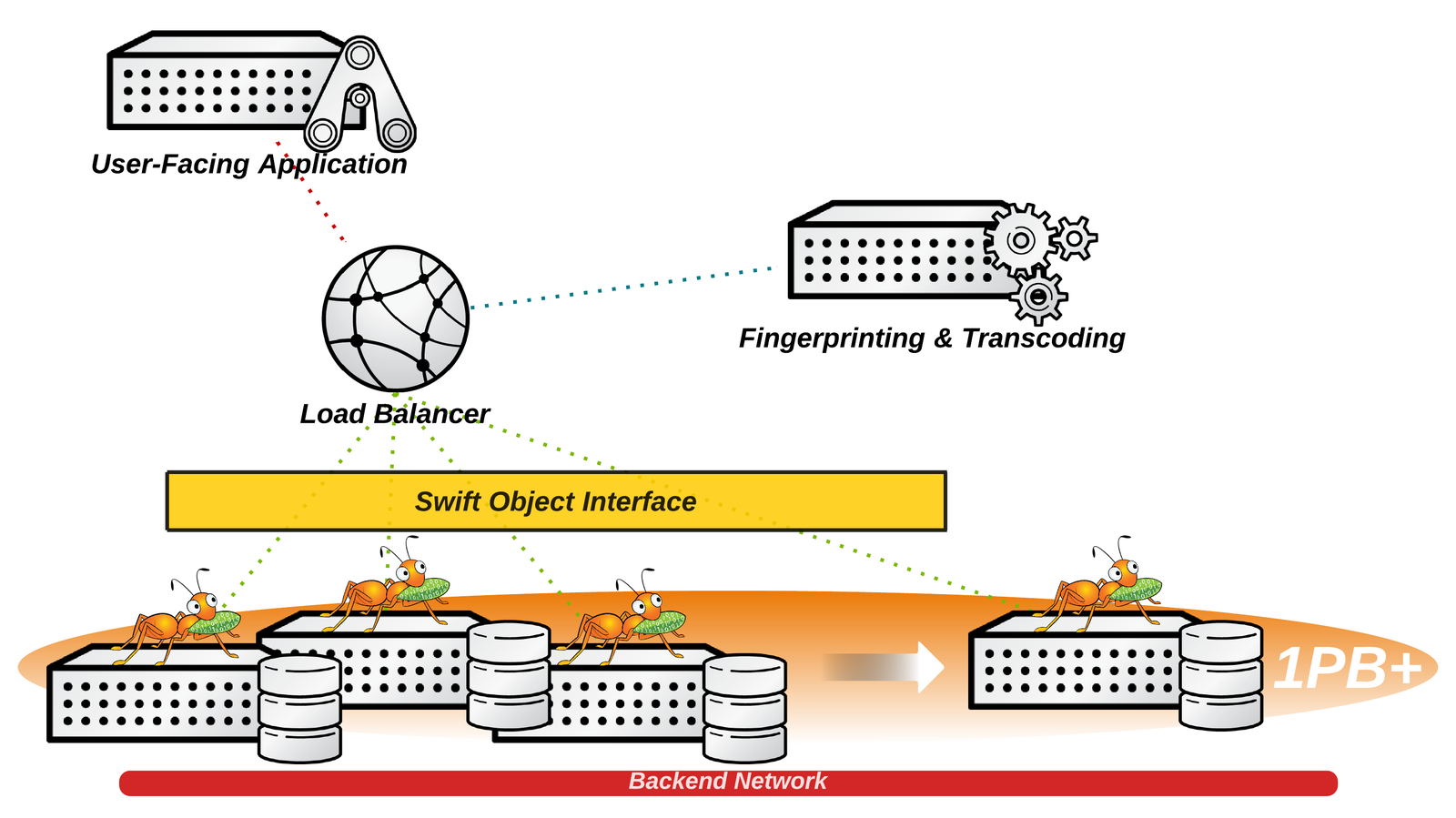

Gluster Use Case: Media Object Storage

Challenge

- Media file storage for customer-facing app
- Drop-in replacement for legacy object backend
- 1PB plus 1TB/day growth rate
- Minimal resistance to increasing scale
- Multi-protocol capable for future services
- Fast transactions for fingerprinting and transcoding

Implementation

- 12 Dell R710 nodes + MD1000/1200 DAS
  - Growth of 6 → 10 → 12 nodes
- ~1PB in total after RAID 6
- GlusterFS Swift interface from OpenStack
- Built-in file+object simultaneous access
- Multi-Gbit network with segregated backend
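The "file+object simultaneous access" point can be sketched as a path mapping: the same data is reachable as a Swift object URL and as a file on a mounted volume. The mapping below (account to volume, container to top-level directory, object to file) and the mount root `/mnt/gluster-object` are assumptions for illustration, not details given on the slide, and `swift_to_file_path` is a hypothetical helper.

```python
from pathlib import PurePosixPath

def swift_to_file_path(url_path: str, mount_root: str = "/mnt/gluster-object") -> PurePosixPath:
    """Map /v1/AUTH_<volume>/<container>/<object> to a file path (assumed layout)."""
    parts = url_path.strip("/").split("/", 3)
    if len(parts) != 4 or parts[0] != "v1":
        raise ValueError(f"not an object path: {url_path!r}")
    _, account, container, obj = parts
    # Assumption: the Swift account name is the volume name with an AUTH_ prefix.
    volume = account.removeprefix("AUTH_")
    return PurePosixPath(mount_root) / volume / container / obj

print(swift_to_file_path("/v1/AUTH_media/videos/2015/clip.mp4"))
```

Under this layout a transcoding job could read and write through the filesystem mount while the customer-facing app keeps using the object API against the same data.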

Diagram

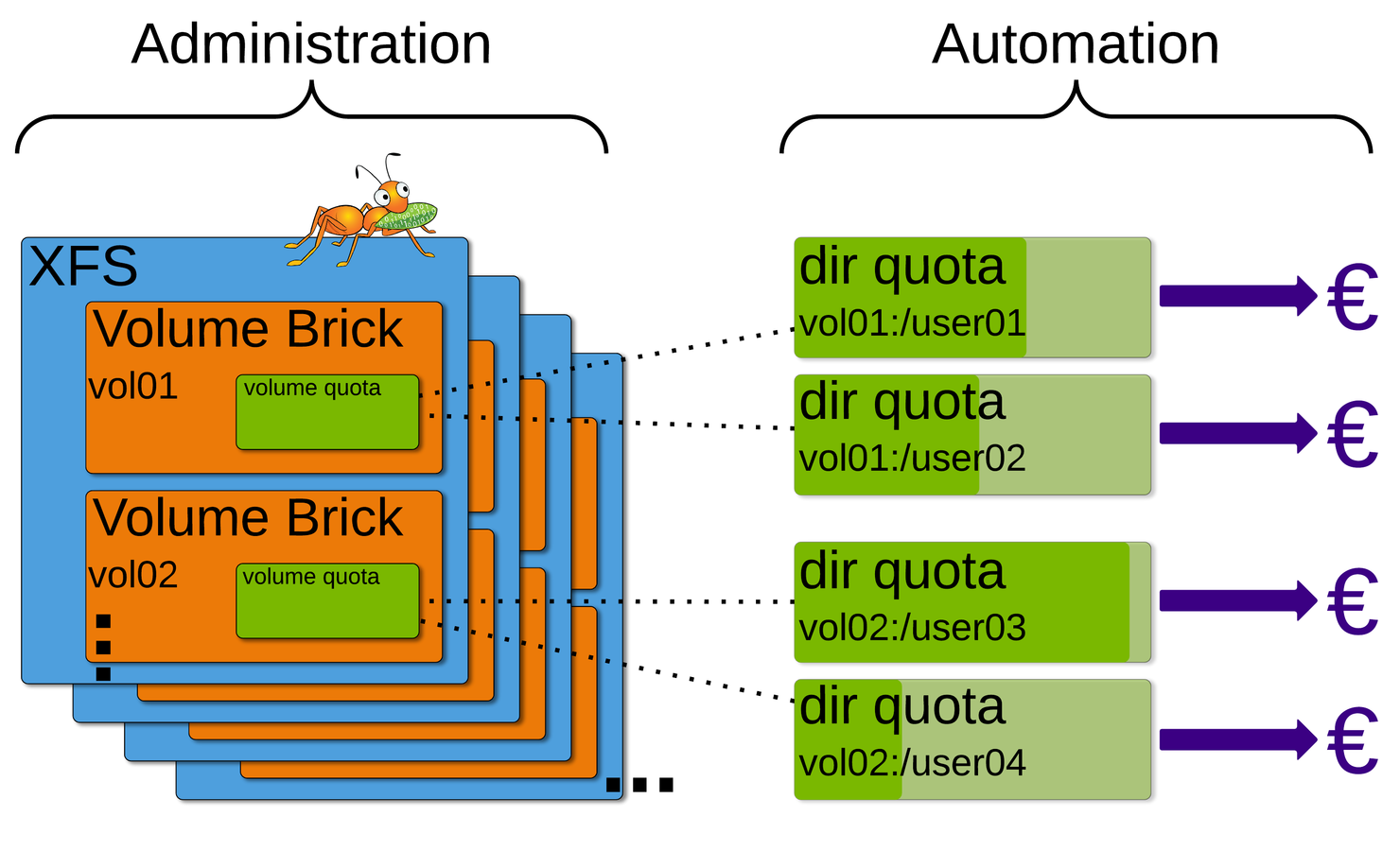

Gluster Use Case: Self-Service with Chargeback

Challenge

- Add file storage provisioning to existing self-service virtualization environment
  - Automate the administrative tasks
- Multi-tenancy
  - Subdivide and limit usage by corporate divisions and departments
  - Allow for over-provisioning
  - Create a charge-back model
- Simple and transparent scaling

Implementation

- Dell R510 nodes with local disk
- ~30TB per node as one XFS filesystem
- Bricks are subdirectories of the parent filesystem
- Volumes are therefore naturally over-provisioned
- Quotas placed on volumes to limit usage and provide for accounting and charge-back
- Only 4 gluster commands needed to allocate and limit a new volume; easily automated
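A minimal sketch of how the allocation could be automated. The slide does not list the four commands; the sequence below (create, start, enable quota, set a usage limit) is an assumption about which ones are meant. Host names, the `/export/xfs` parent path, and the helper name `provisioning_commands` are hypothetical. The code only builds and prints the commands; it does not run them.

```python
import shlex

def provisioning_commands(volume: str, bricks: list[str], quota_limit: str) -> list[list[str]]:
    """Build the gluster CLI calls for one tenant volume (assumed sequence)."""
    return [
        # Bricks are subdirectories of each node's single XFS filesystem.
        ["gluster", "volume", "create", volume, *bricks],
        ["gluster", "volume", "start", volume],
        ["gluster", "volume", "quota", volume, "enable"],
        # Limit the whole volume ("/") so usage can be capped and charged back.
        ["gluster", "volume", "quota", volume, "limit-usage", "/", quota_limit],
    ]

# Hypothetical tenant and nodes.
commands = provisioning_commands(
    "finance",
    ["node1:/export/xfs/finance", "node2:/export/xfs/finance"],
    "500GB",
)

for argv in commands:
    print(shlex.join(argv))
```

A provisioning portal could pass each list to `subprocess.run(argv, check=True)` on a trusted storage node once the names and limits have been validated.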

Diagram

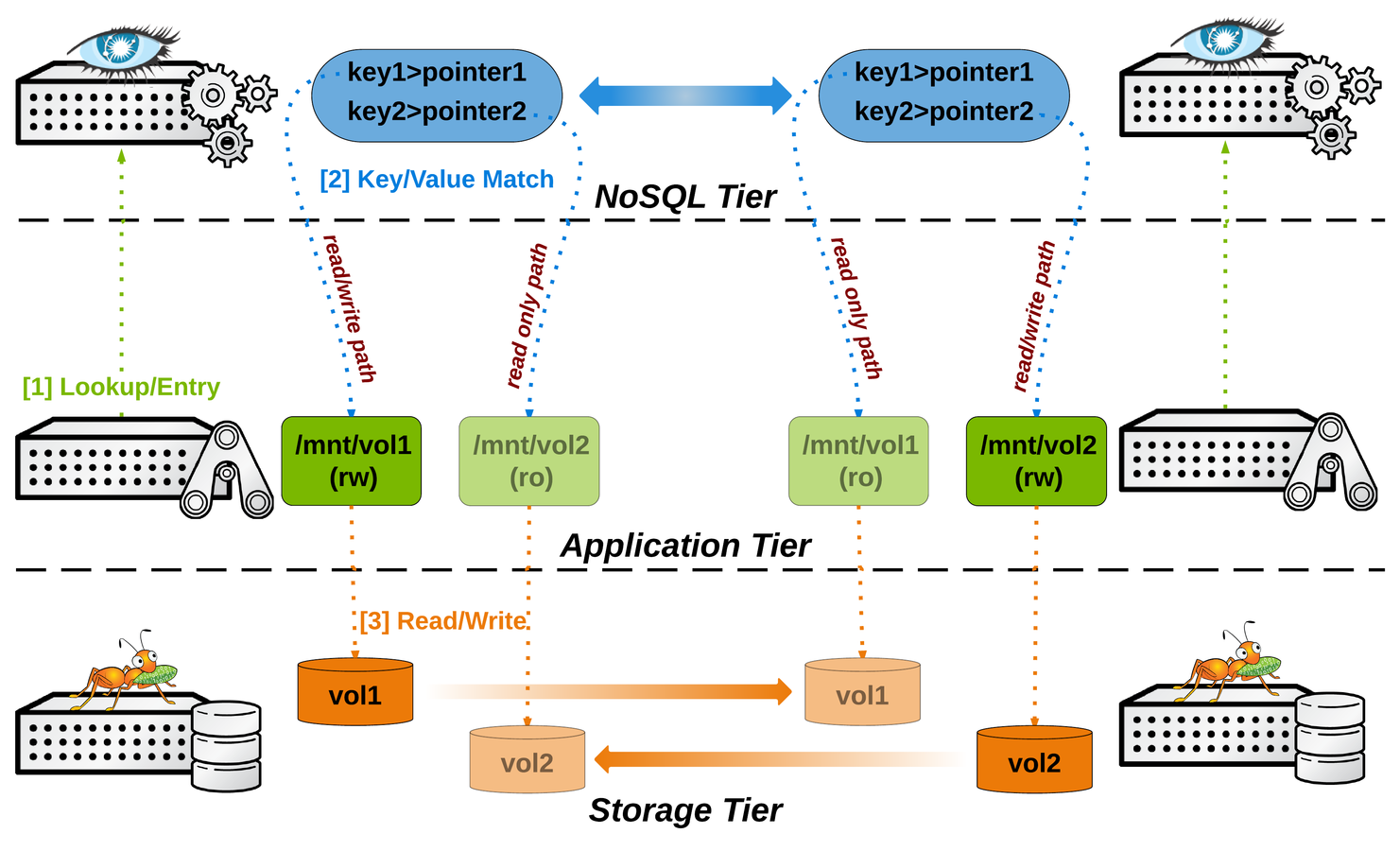

Gluster Use Case: NoSQL Backend with SLA-Bound Geo-Replication

Challenge

- Replace legacy database key/blob architecture
- Divide and conquer
  - NoSQL layer for key/pointer
  - Scalable storage layer for blob payload
- Active/Active sites with 30-minute replication SLA
- Performance tuned for small-file WORM patterns

Implementation

- HP DL170e nodes with local disk
- ~4TB per node
- Cassandra replicated NoSQL layer for key/pointer
- GlusterFS parallel geo-replication for data payload site copy exceeding SLA standards
- Worked with Red Hat Engineering to modify application data patterns for better small-file performance

Diagram

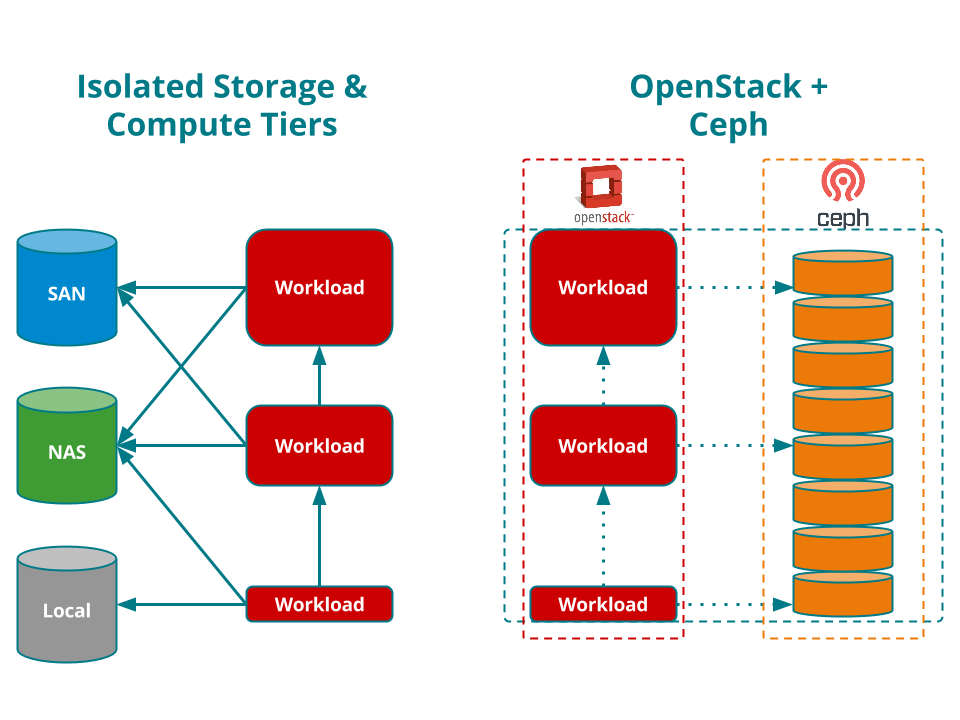

Ceph Use Case: Storage & Compute Consolidation for Scientific Research

Challenge

- Scale with storage needs
- Eliminate need to move data between backends
- Keep pace with exponential demand
- Reduce administrative overhead; spend more time on the science
- Control and predict costs
- Scale on demand
- Simple chargeback model
- Efficient resource consumption

Implementation

- Dell PowerEdge R720 Servers
- OpenStack + Ceph
- HPC and Storage on the same commodity hardware
- Simple scaling, portability, and tracking for chargeback and expansion
- 400TB virtual storage pool
- Ample unified storage on a flexible platform reduces administrative overhead

Diagram

Ceph Use Case: Multi-Petabyte RESTful Object Store

Challenge

- Object-based storage for thousands of cloud service customers
- Seamlessly serve large media & backup files as well as smaller payloads
- Quick time-to-market and pain-free scalability
- Highly cost-efficient with minimal proprietary reliance
- Standards-based for simplified hybrid cloud deployments

Implementation

- Modular server-rack-row "pod" system
  - 6x Dell PowerEdge R515 servers per rack
  - 12x 3TB disks per server; total 216TB raw per rack
  - 10x racks per row; total 2.1PB raw per row
  - 700TB triple-replicated customer objects
- Leaf-Spine mesh network for scale-out without bottleneck
- Ceph with RADOS Gateway
  - S3 & Swift access via RESTful APIs
  - Tiered storage pools for metadata, objects, and logs
- Optimized Chef recipes for fast modular scaling
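The pod capacity figures can be checked with a short calculation. One assumption is needed: 12 disks per server, which is the count that reproduces the 216TB-per-rack figure with 6 servers and 3TB disks. The slide's 2.1PB and 700TB read as rounded versions of the results below.

```python
SERVERS_PER_RACK = 6
DISKS_PER_SERVER = 12   # assumption: the count that yields 216TB raw per rack
DISK_TB = 3
RACKS_PER_ROW = 10
REPLICAS = 3            # triple replication

raw_per_rack_tb = SERVERS_PER_RACK * DISKS_PER_SERVER * DISK_TB
raw_per_row_tb = raw_per_rack_tb * RACKS_PER_ROW
usable_per_row_tb = raw_per_row_tb // REPLICAS

print(f"raw per rack: {raw_per_rack_tb} TB")     # 216 TB
print(f"raw per row:  {raw_per_row_tb} TB")      # 2160 TB, about 2.1PB
print(f"usable (3x):  {usable_per_row_tb} TB")   # 720 TB, about 700TB
```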

Questions?

Do it!

- Build a test environment in VMs in just minutes!
- Get the bits:
  - Fedora 23 has GlusterFS and Ceph packages natively
  - RHSS 3.1 ISO available on the Red Hat Portal
- Go upstream: gluster.org / ceph.com

Thank You!

@RedHatOpen @Gluster @Ceph @dustinlblack #RedHat